Part of my series of notes from ICLR 2019 in New Orleans.

These are talks (and panels) from the Safe ML Workshop.

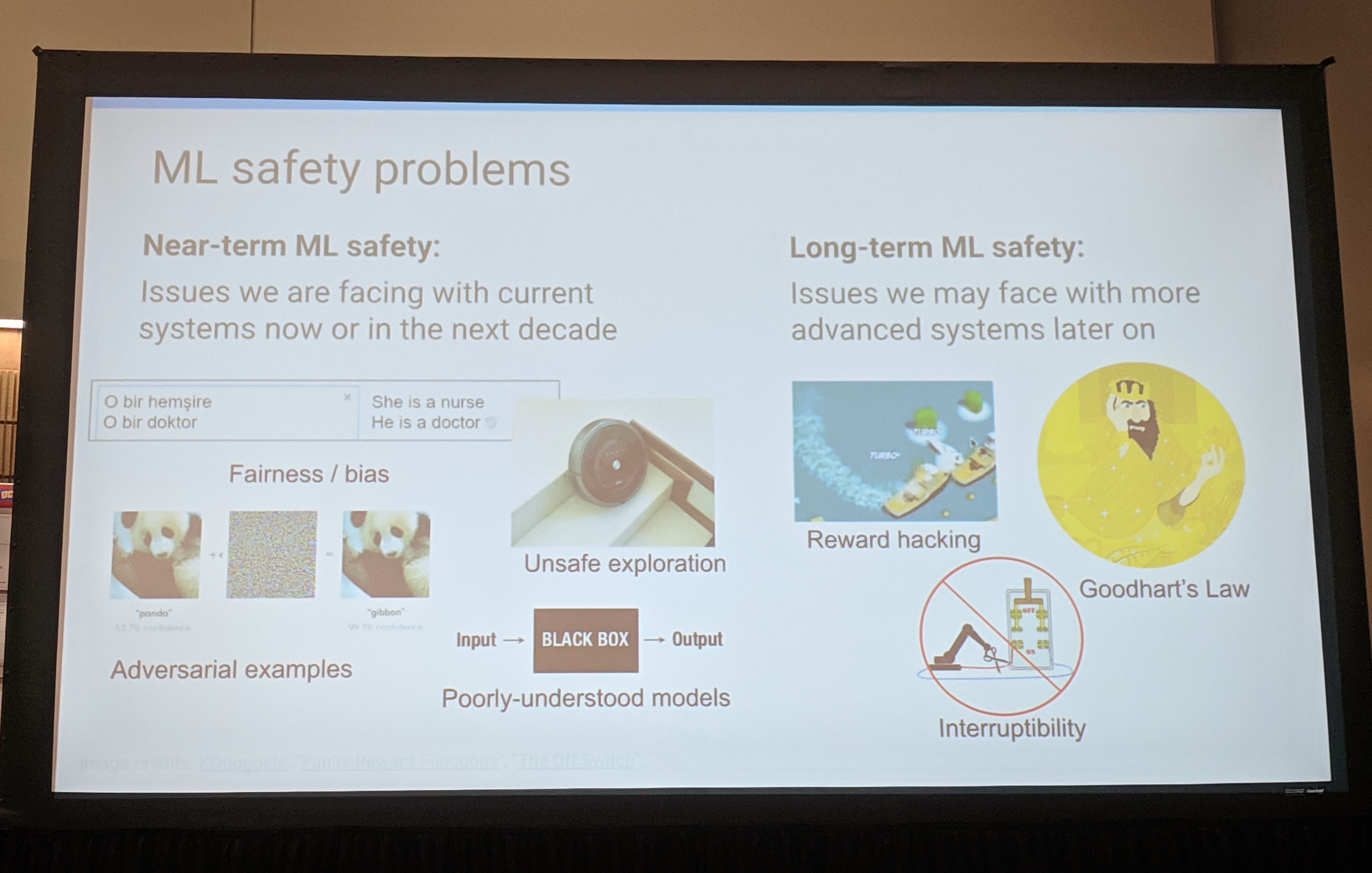

Introduction

- ensure ML algorithms

- do what they’re supposed to

- avoid causing harm

- have positive impact

Cynthia Rudin: Interpretability for Important Problems

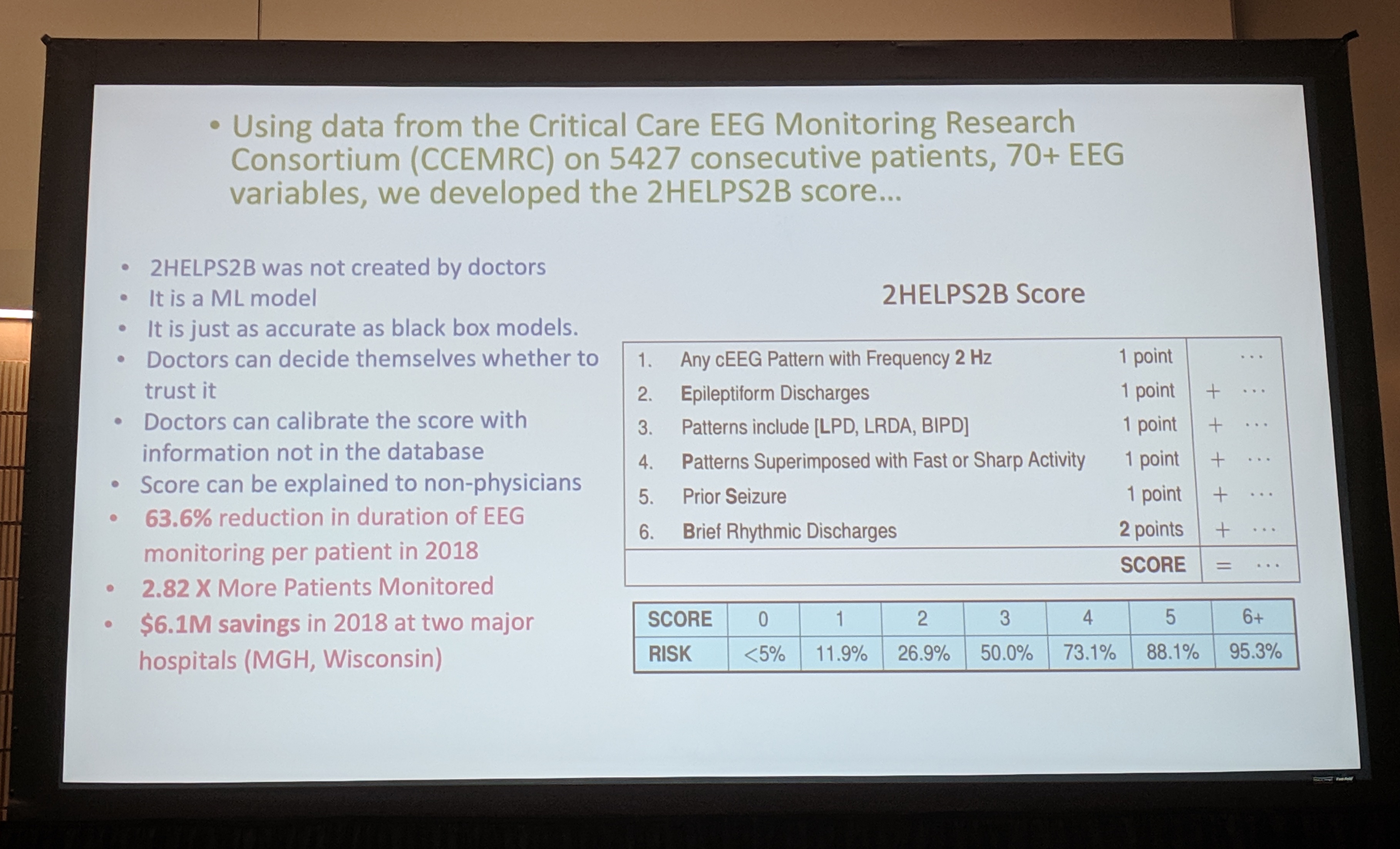

- example: EEG used to monitor seizures

- expensive and underused where it’s actually needed

- need seizure prediction

- => 2HELPS2B score – ML model but totally interpretable, medically validated

- sparse linear model with integer coefficients

- add constraints to improve interpretability while retaining accuracy

- Rashomon set – the set of good (equally accurate) models is fairly big, so there’s probably an interpretable model in here

- (I assume this is named after this movie, which you should definitely watch if you haven’t already!)

- explainability (posthoc) vs. interpretability (no black boxes to start with)

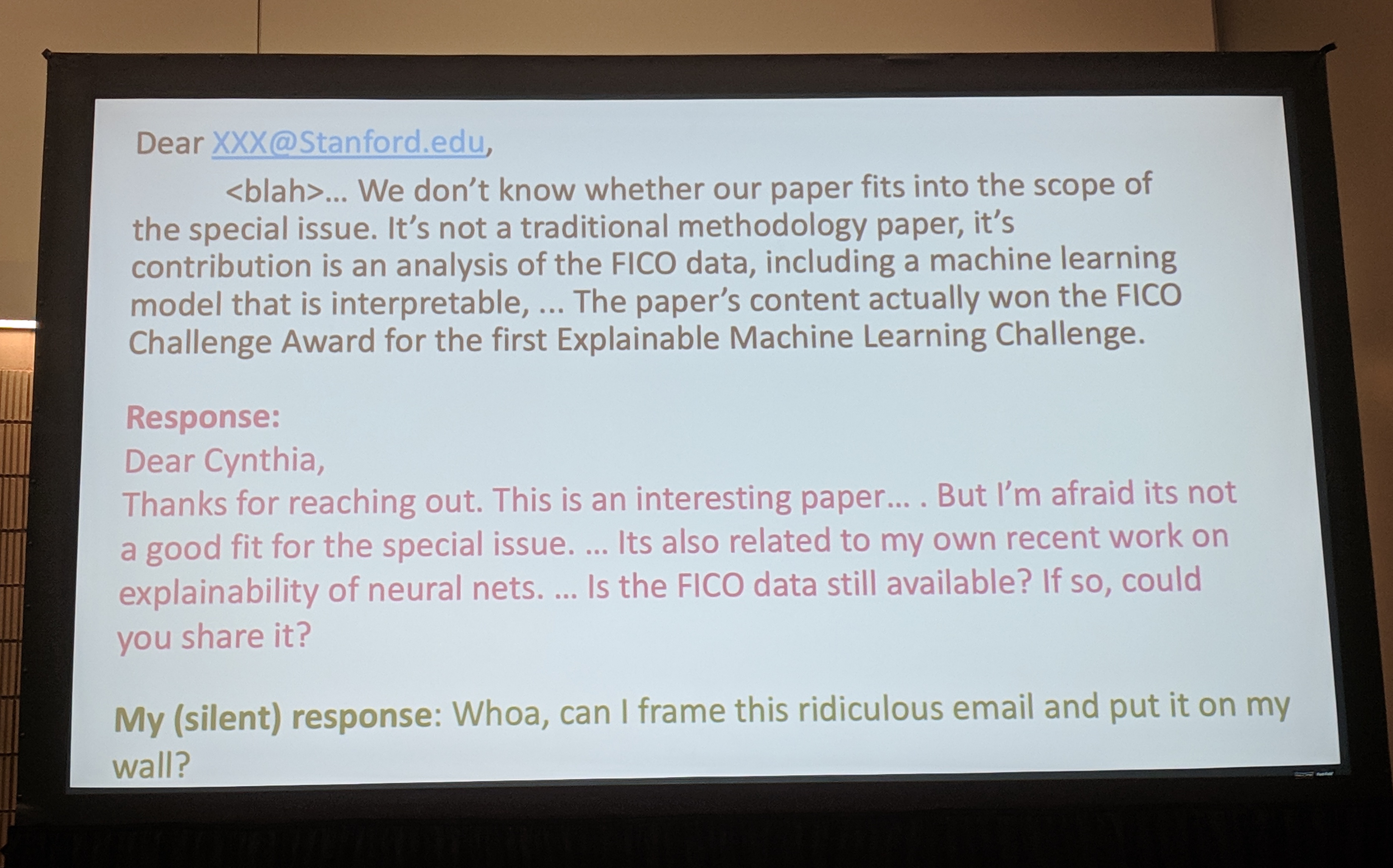

- a hilarious FICO competition story…

- sometimes you really don’t need black boxes

- sometimes you do though, e.g. computer vision

- This Looks Like That (Chen et al. 2018)

- case-based reasoning – “K-nearest parts of prototypical cases”

- learn prototypes, similarity scores, class connection weights

- Risk-SLIM (Ustun & Rudin 2017)

- bad, bad objective (complexity theory-wise)

- lattice cutting plane algorithm – adapted to work for mixed-integer program

- takeaway: training these kinds of models is difficult, but doesn’t require sacrificing accuracy

- if you’re working on something important, maybe it’s worth it
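
To make "sparse linear model with integer coefficients" concrete, here is a minimal sketch of what such a scoring system looks like at prediction time. The feature names, point values, and intercept are made up for illustration (they are not the 2HELPS2B or Risk-SLIM coefficients), and the logistic link is my assumption about how points map to risk. The hard part from the talk, finding good integer coefficients, is not shown.

```python
import math

# Hypothetical integer point values, for illustration only.
# These are NOT the published 2HELPS2B or Risk-SLIM coefficients.
POINTS = {"feature_a": 2, "feature_b": 1, "feature_c": 1}
INTERCEPT = -3  # hypothetical integer offset


def total_points(patient):
    """Sum the integer points for every binary feature that is present."""
    return sum(pts for name, pts in POINTS.items() if patient.get(name, False))


def predicted_risk(patient):
    """Map the integer score to a probability with a logistic link (assumed)."""
    return 1.0 / (1.0 + math.exp(-(INTERCEPT + total_points(patient))))


patient = {"feature_a": True, "feature_c": True}
print(total_points(patient), predicted_risk(patient))
```

The whole model fits on an index card: a clinician can add up the points by hand, which is the interpretability argument in a nutshell.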

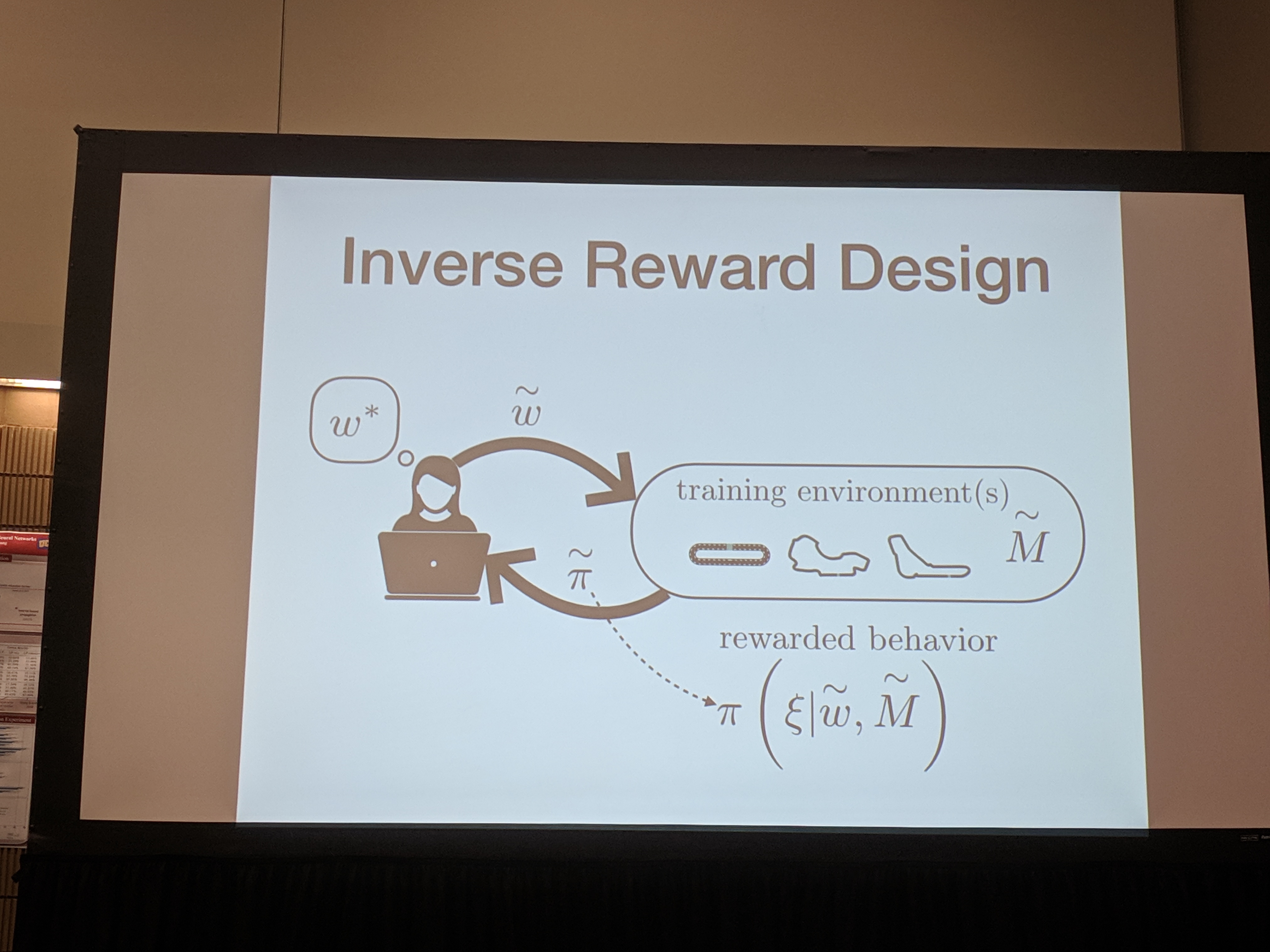

Dylan Hadfield-Menell: Formalizing the Value Alignment Problem in AI

- how models figure out what you want

- Faulty Reward Design – intended vs. actual environment when designing reward functions

- Bayesian approach to express uncertainty about unknown states

- => Inverse Reward Design – provide proxy reward function + training environments

- more generally: cooperative inverse reinforcement learning (paper)

- robot chooses action, human chooses objective function

- computationally difficult though (decentralized POMDP)

- can show it’s actually slightly more tractable than it seems initially

- Assistive Multi-Armed Bandit (paper)

- how to help people who (initially) don’t know what they want?

- learning vs. assisting strategies

- are there assisting strategies that can actually help humans learn?

- e.g. make an inconsistent learner consistent

- example: greedy strategy

- human picks best action seen so far (exploit)

- robot ensures human explores

- “noisy-greedy-in-the-limit” – very weak sufficient condition for when assisting can help
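
The greedy example can be played out in a toy simulation. This is my own rough sketch, not the setup from the paper: I assume Bernoulli arms, a human who always suggests the arm with the best empirical mean so far, and a robot that usually defers to the suggestion but occasionally pulls a random arm instead. The names `greedy_choice`, `run`, and `explore_prob` are all made up here.

```python
import random


def greedy_choice(sums, counts):
    """Human policy: suggest the arm with the best empirical mean so far.
    Unpulled arms count as 0.0; ties go to the lowest index."""
    means = [s / c if c else 0.0 for s, c in zip(sums, counts)]
    return max(range(len(means)), key=lambda a: (means[a], -a))


def run(arm_means, steps, explore_prob, seed=0):
    """Simulate the human (suggests) and the robot (actually pulls)."""
    rng = random.Random(seed)
    k = len(arm_means)
    sums, counts = [0.0] * k, [0] * k
    total = 0.0
    for _ in range(steps):
        suggestion = greedy_choice(sums, counts)
        # Robot: usually defers, but sometimes forces exploration.
        arm = rng.randrange(k) if rng.random() < explore_prob else suggestion
        reward = 1.0 if rng.random() < arm_means[arm] else 0.0
        # The human observes the outcome and updates their estimates.
        sums[arm] += reward
        counts[arm] += 1
        total += reward
    return total / steps, counts


alone = run([0.3, 0.5, 0.8], steps=2000, explore_prob=0.0)
assisted = run([0.3, 0.5, 0.8], steps=2000, explore_prob=0.2)
print("alone:", alone)
print("assisted:", assisted)
```

With `explore_prob=0.0` this particular greedy human never leaves the first arm, because nothing ever gives them evidence about the others. The robot's forced exploration is what supplies that evidence.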

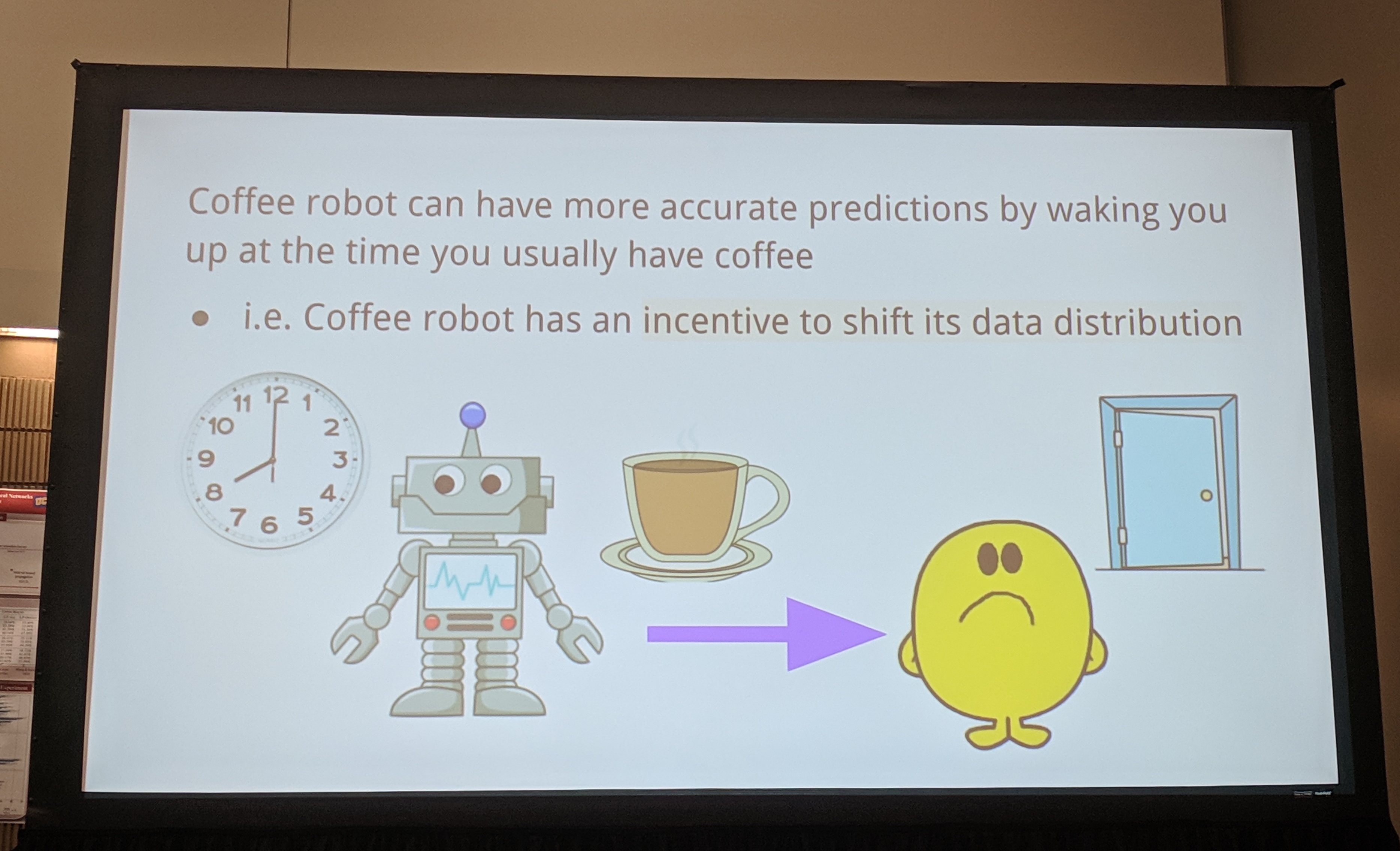

David Krueger: Misleading meta-objectives and hidden incentives for distributional shift

- distributional shift – train/test distributions are different

- what learners “want” – objective function tells us the ends, but the means are also important

- Self-Induced Distributional Shift (SIDS)

- algorithms don’t model their own effects on the world

- Hidden Incentives for Distributional Shift (HIDS)

- causes models to “cheat”

- look out for HIDS!!!

- naive specification of objective creates hidden incentive to shift the distribution

- unit test (!) for HIDS using meta-learning

- HIDS mitigation strategy: environment swapping

- rewards from your actions go to another agent

- why do we care?

- unknown unknowns

- feedback loops in content recommendation

- don’t want robots taking over the world to optimize their objective function

Panel Number One

- analogous problems might still have different solutions

- e.g. AI systems & capitalism both minimize/maximize objectives with potentially unknown side effects

- but what you do about them might be very different

- companies design systems that they are not subject to

- e.g. criminal justice

- incentives not aligned

- think about why pro-active regulation isn’t happening

- humans aren’t good at pro-activity… but right now AI has pretty much nothing

- ML researchers can help but probably can’t solve it alone; it needs input from ethicists, social scientists, etc.

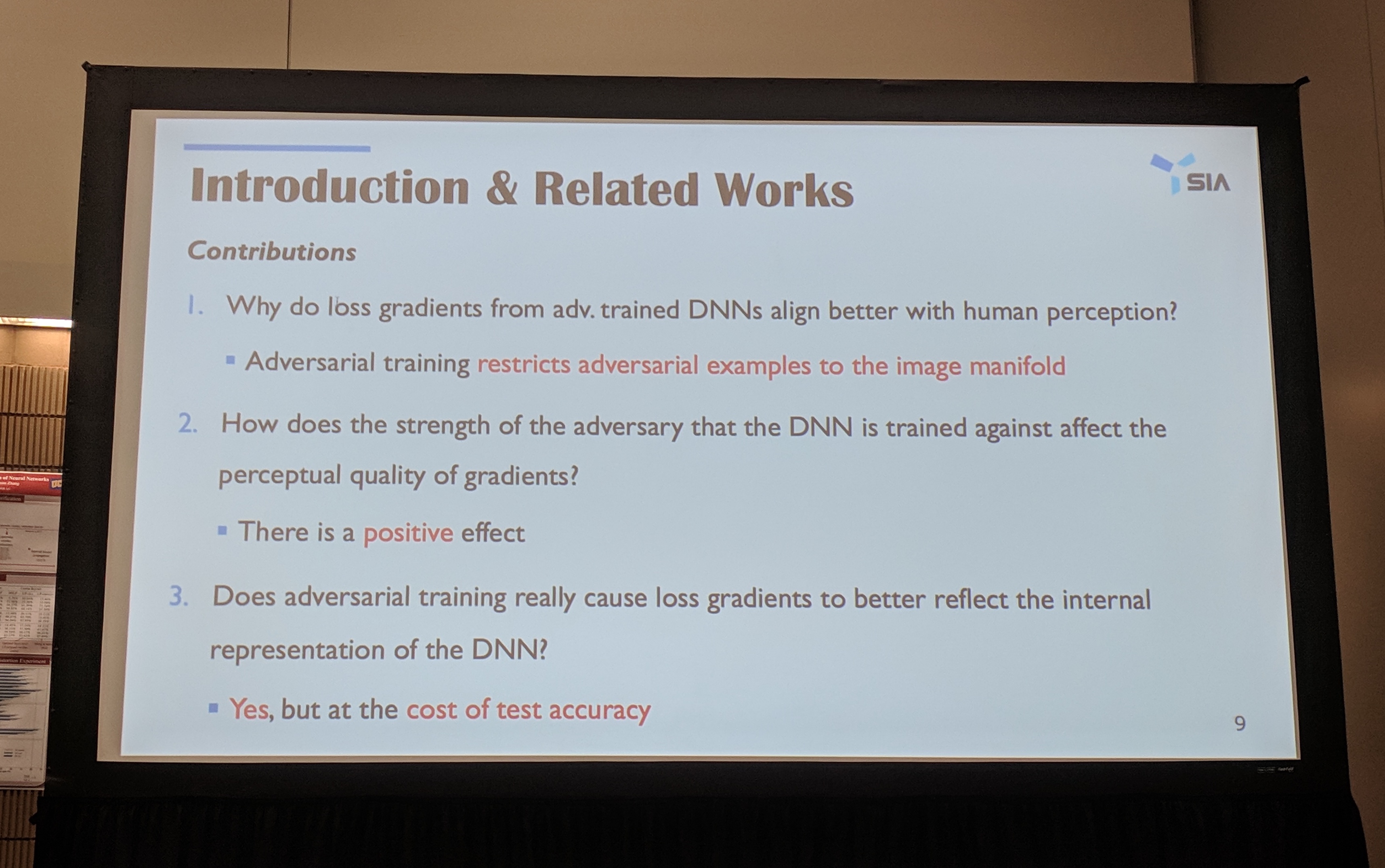

Beomsu Kim: Bridging Adversarial Robustness and Gradient Interpretability

- adversarial training causes loss gradients to be visually interpretable

- not necessarily better descriptions of internal representations though

- restricts adversarial examples to image manifold

- stronger adversary => adversarial examples look more natural

- hypothesis: the decision boundary tilting along low-variance directions is what gives rise to adversarial examples

- adversarial training prevents decision boundary from tilting

- quantitative interpretability

- (missed how they’re defining this?)

- tradeoff between accuracy and gradient interpretability

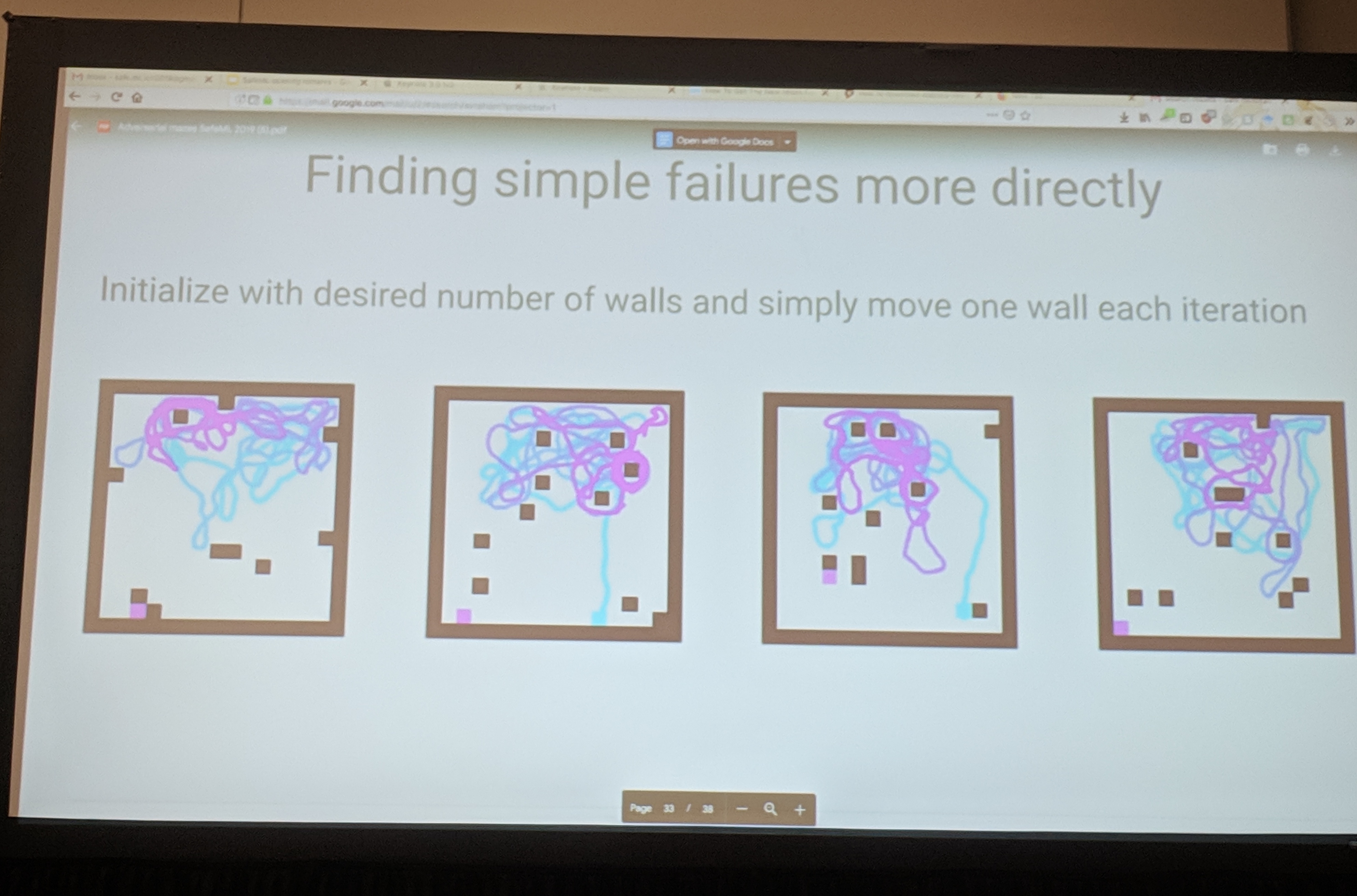

Avraham Ruderman: Uncovering Surprising Behaviors in Reinforcement Learning via Worst-Case Analysis

- evaluating RL

- outside training distribution

- worst-case rather than average

- examples with mazes + ones with walls randomly moved/removed

- local search to find worst maze ever, even ones with very sparse wall structure

- rare but simple mazes

- humans do just fine on them… some of these algorithm failures are quite amusing

- some transfer across agents

- finding failure examples is much less efficient than for supervised learning

- can failure cases help us understand what causes failure?
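
Here is a toy version of the "local search for the worst maze" idea. It is a sketch under heavy assumptions, not the method from the talk: the "agent" is a hand-written stand-in policy (go right if free, else down) rather than a trained RL agent, the maze is a 5×5 grid, and the search is plain hill climbing that toggles one wall at a time and only keeps mazes that are still solvable.

```python
import random
from collections import deque

N = 5
START, GOAL = (0, 0), (N - 1, N - 1)


def solvable(walls):
    """BFS check that some path reaches the goal, so the maze is fair."""
    seen, queue = {START}, deque([START])
    while queue:
        r, c = queue.popleft()
        if (r, c) == GOAL:
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (r + dr, c + dc)
            if (0 <= nxt[0] < N and 0 <= nxt[1] < N
                    and nxt not in walls and nxt not in seen):
                seen.add(nxt)
                queue.append(nxt)
    return False


def agent_shortfall(walls):
    """Stand-in agent: go right if free, else down, else give up.
    Returns the remaining Manhattan distance to the goal (0 = success)."""
    r, c = START
    while (r, c) != GOAL:
        if c + 1 < N and (r, c + 1) not in walls:
            c += 1
        elif r + 1 < N and (r + 1, c) not in walls:
            r += 1
        else:
            break
    return (N - 1 - r) + (N - 1 - c)


def local_search(steps=2000, seed=0):
    """Hill-climb over wall layouts to make the agent do as badly as possible."""
    rng = random.Random(seed)
    cells = [(r, c) for r in range(N) for c in range(N)
             if (r, c) not in (START, GOAL)]
    walls, best = set(), agent_shortfall(set())
    for _ in range(steps):
        candidate = walls ^ {rng.choice(cells)}  # toggle one wall
        if not solvable(candidate):
            continue  # only keep mazes a human could still solve
        score = agent_shortfall(candidate)
        if score >= best:  # accept ties so the search can drift
            walls, best = candidate, score
    return walls, best


walls, shortfall = local_search()
print(sorted(walls), shortfall)
```

Even in this toy, the failures it finds are mazes that stay solvable while the fixed policy gets stuck.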

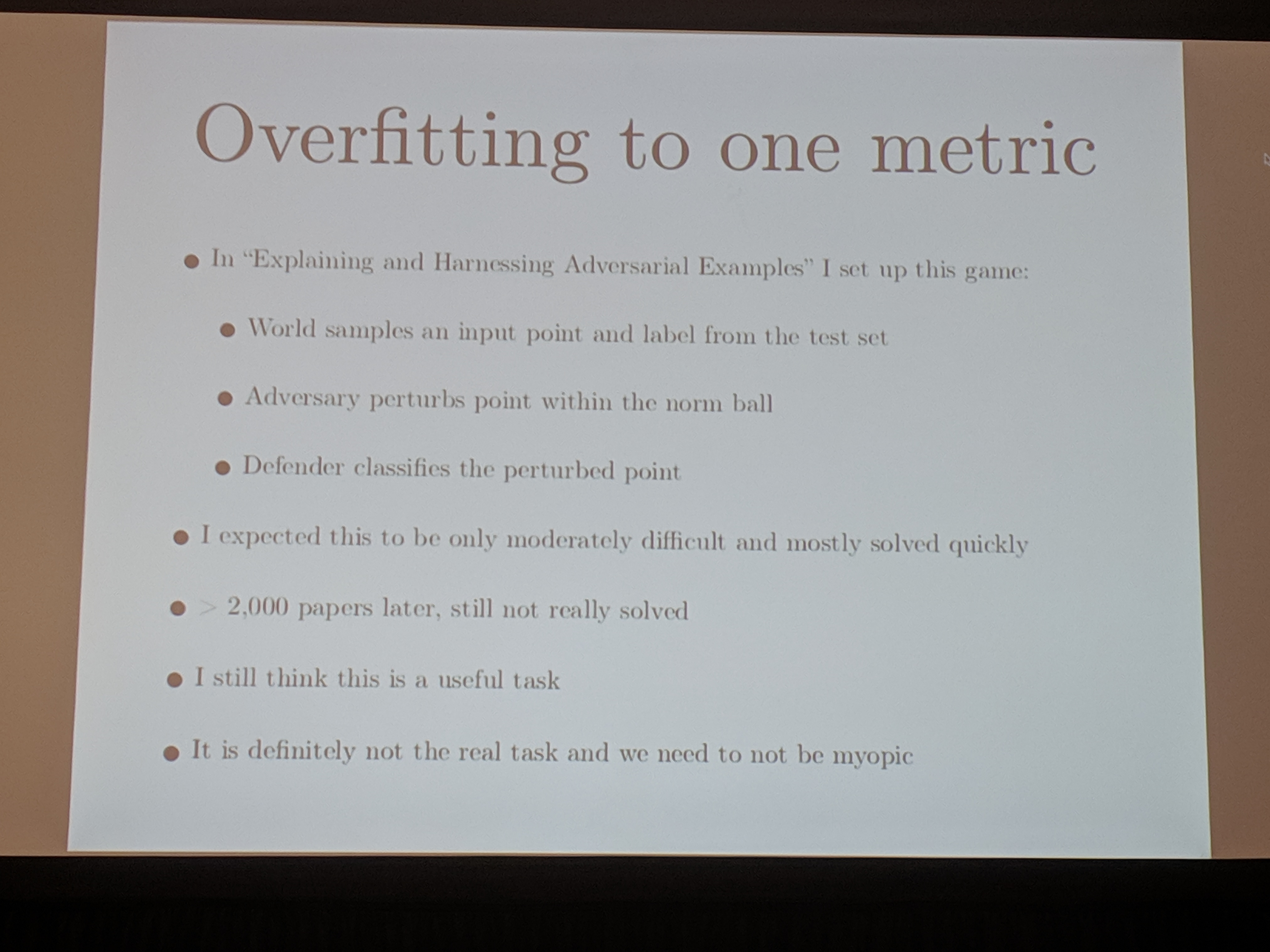

Ian Goodfellow: The case for dynamic defenses against adversarial examples

- based on this

- adversarial examples: anything that is designed to mess with a model

- not just imperceptible, etc.

- the research community is overfitting to the problem he proposed 5 years ago…

- i.e. small norm ball perturbations model of adversarial examples

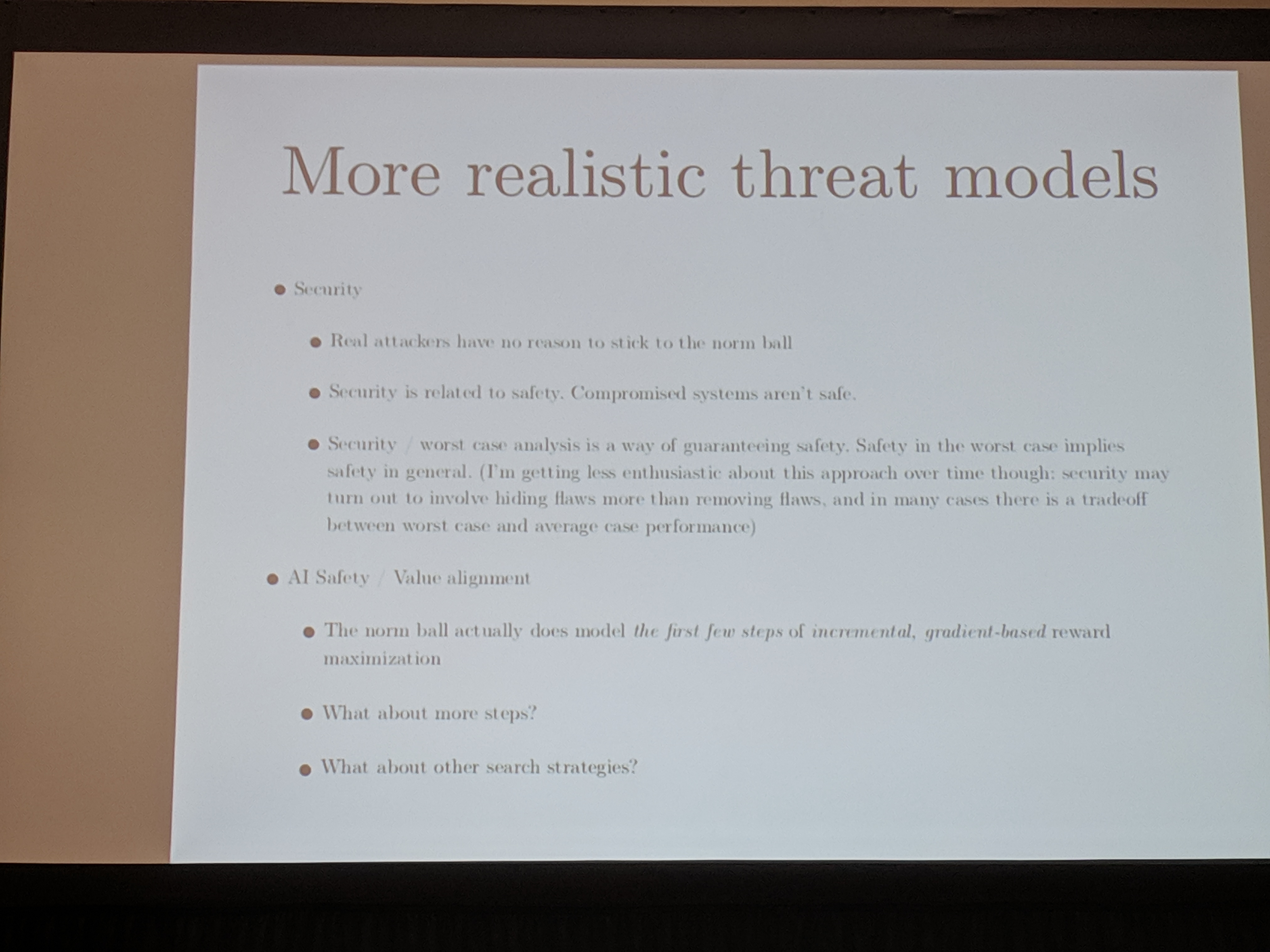

- need more realistic threat models

- no reason for attackers to stick to the norm ball

- value alignment – this attack corresponds to only first few steps of optimisation

- only focusing on starting points within test set and perturbing

- “expectimax”

- still not really solved after 2000 papers, but maybe some people should be moving on

- what about “true max”?

- test set attack (Gilmer et al. 2018) – find errors in the test set and repeat them forever

- as long as failure rate r ≠ 0, this attack works

- adversarial training improves accuracy on adversarial set but tends to decrease accuracy on natural test set

- r = 0 will never happen (except for simple tasks), so can’t defeat the test set attack

- btw humans also have non-zero failure rate, so for once humans don’t give us an existence proof

- deterministic defenses just don’t work

- what about stochastic?

- model still can’t be perfect, so still a failure rate

- what about abstention?

- still just reducing r, still not perfect (m+1 classes instead of m)

- so… what about dynamic?

- breaks train / infer division

- requires behaviour that changes after deployment

- very scary

- but almost certainly necessary for true defense

- side effect: if you believe dynamic defense is necessary, then making models less flaky also makes things more secure

- “memorization” defense (with or without abstention)

- existence of dynamic defense that outperforms fixed defenses on test set attack

- dynamic is necessary, probably not sufficient

- question: does dynamic defense always need an oracle?

- hard to imagine what it looks like without…
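
The test set attack versus a "memorization" defense is easy to simulate. The sketch below is my own illustration, not code from the talk: I assume a fixed classifier that is wrong on a fixed 5% of inputs, an attacker who finds those errors and replays them, and a dynamic defense that gets the true label from an oracle after each mistake and memorizes it. All names and parameters are made up.

```python
import random


def simulate(n_inputs=1000, error_rate=0.05, n_attacks=500, seed=0):
    rng = random.Random(seed)

    def true_label(i):
        return i % 2  # arbitrary ground truth for the toy task

    # Fixed (static) model: wrong on a fixed subset of inputs, so r != 0.
    n_wrong = max(1, round(error_rate * n_inputs))
    wrong = set(rng.sample(range(n_inputs), n_wrong))

    def model(i):
        return 1 - true_label(i) if i in wrong else true_label(i)

    # Attacker: find the errors in the test set, then repeat them forever.
    errors = [i for i in range(n_inputs) if model(i) != true_label(i)]

    memory = {}  # dynamic defense: corrections learned after deployment
    static_failures = dynamic_failures = 0
    for t in range(n_attacks):
        x = errors[t % len(errors)]
        if model(x) != true_label(x):
            static_failures += 1
        prediction = memory.get(x, model(x))
        if prediction != true_label(x):
            dynamic_failures += 1
            memory[x] = true_label(x)  # oracle feedback (assumed available)
    return static_failures, dynamic_failures, len(errors)


static_failures, dynamic_failures, n_errors = simulate()
print(static_failures, dynamic_failures, n_errors)
```

The static model fails on every single attack, while the memorizing one fails at most once per distinct error. Note that this only works because the sketch assumes an oracle, which is exactly the open question in the last bullet.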

Panel Number Two

- how do we get good specifications to then optimize?

- they’re not just handed down from god…

- “One thing that will get easier is convincing people of the importance of AI safety research” (Goodfellow)

- make AIs that make the same mistakes as humans?

- dynamic proposal: can’t make a perfect system, so should make mistakes that aren’t predictable rather than modeling them on humans

- there isn’t even a ground truth for most tasks, so of course AI systems will make mistakes

- AI safety: how do you deal with the fact that humans are suboptimal?

- think about how safety works in other fields of computer science

- e.g. Byzantine fault tolerance

- Goodfellow wants fewer crappy adversarial example papers

Above: Goodfellow, sick and tired of crappy adversarial example papers (I’m just kidding, he actually always looks like this).